We’re here with Gregory Kramida to talk about his Kinect group projects at the University of Maryland.

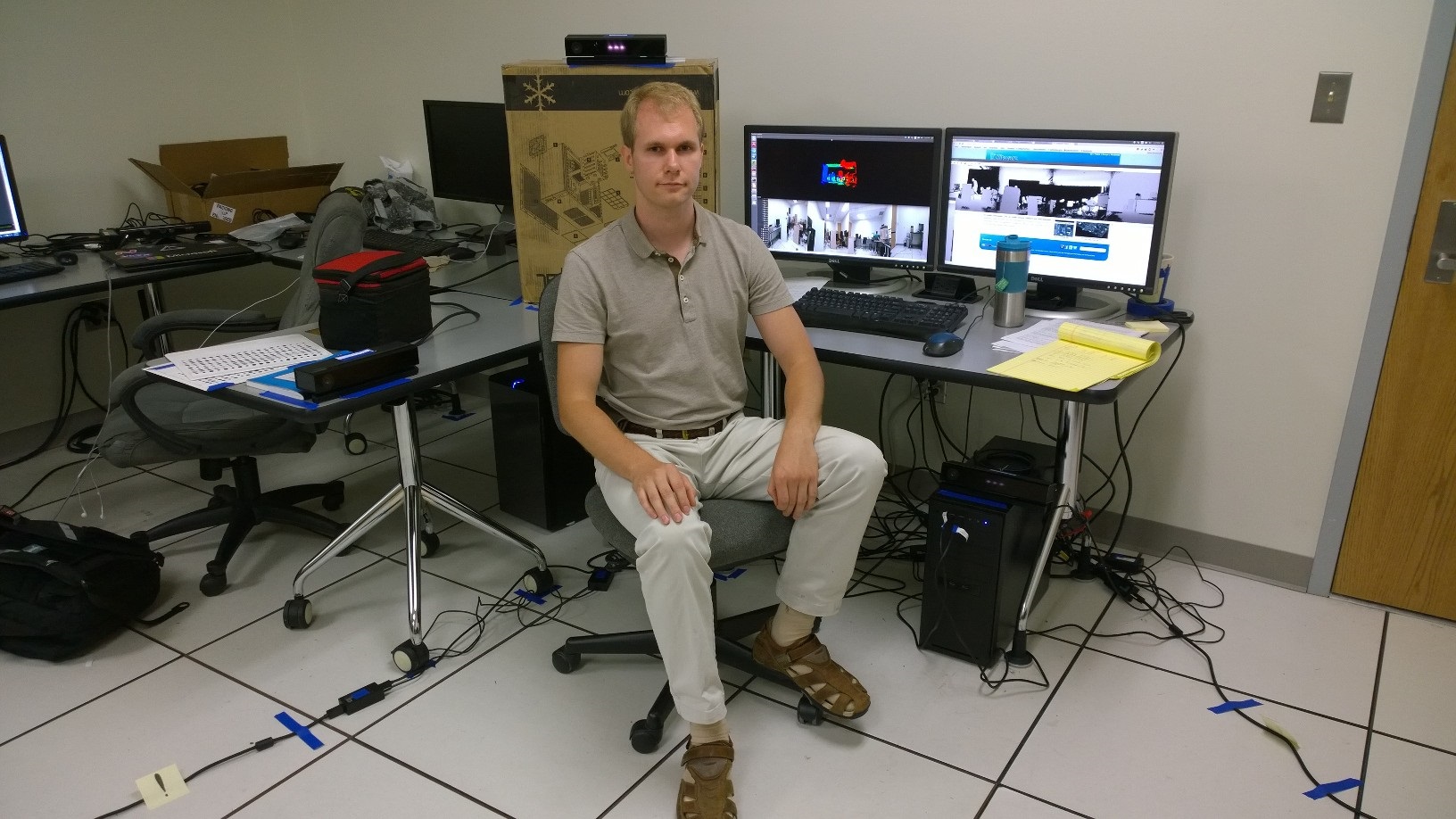

Gregory Kramida at UMD

1. Greg, tell us a little bit about yourself and your team.

I received a BA in Graphic Design, and a BS and MS in Computer Science (CS), from the University of Maryland. Currently, I am a PhD student in CS at the same university. My team consists of four very talented young individuals and myself.

We currently have two other CS PhD students, one Physics Masters student, and one Computer Engineering undergraduate student. None of us is fully finished with our educational goals, and some of us are still finding our true calling, so we are forced to collaborate in an ad-hoc fashion.

Whenever any of us has the time and energy, they contribute. Hence, I am the only “permanent” member, and we are always looking for like-minded, driven, and talented individuals.

2. How did you get started with Kinect development?

I first started working with the Kinect One (for Xbox 360) back in 2013. My very first project with the Kinect One involved analysis of basic human motion and behavior using the Kinect SDK V1 for Windows. It was done for a seminar course in Computer Vision and later served as the impetus for the current project, which involves multiple Kinect 2 time-of-flight cameras for reconstruction of dynamic scenes.

In the interim, I have toyed with related 3D reconstruction using high-resolution, high-framerate passive-light cameras with custom lenses. Meanwhile, other team members were exposed to the Kinects in various settings, some in academic endeavors, others in hackathons.

3. What programming languages and libraries/utilities are you using?

To interface with the Kinect V2, we are using the freenect2 driver, which can be deployed in both Windows and *nix-like environments (Mac OS X included). We are collaborating with the Autonomous Robotics and Perception Group (ARPG) at the University of Colorado in maintaining a custom middleware stack.

The ARPG stack helps us easily record Kinect data streams to disk, as well as to perform capture and calibration of multiple Kinects in distributed settings. This same middleware layer will possibly help us to integrate the system with other sensors working alongside the Kinects in the future.

Finally, we use the Visualization Toolkit (VTK) for rendering tasks, the Qt Toolkit for interface, and the Point Cloud Library (PCL), which has multiple extremely useful 3D reconstruction utilities. We also set up an easy way to export all of our routines to the Python scripting language, aiming to provide, so-to-speak, a “digital playground” for scientists and innovators who would like to easily use our tools without writing “heavy-weight” C++ applications.

4. What kind of challenges and limitations have you faced? How did you overcome them?

Since a lot of our project infrastructure relies heavily on very raw third-party code that is in active development, we actually had to contribute to these projects, fixing various bugs, to get everything working both close to the metal and in the higher-level task.

Teamwise, we also went through some organizational difficulties. We incidentally met each other only around the beginning of summer: just when everyone already had their summer plans. Hence, we’ve had to take into account each other’s unrelated venues and work around them. Often, this meant losing a person for months or working remotely.

5. How many Kinect sensors are you using from a single application? Can you give more details about your configuration/setup?

We are currently using four Kinect V2 devices simultaneously, hooked to a single machine, and testing an alternative distributed configuration with five Kinect V2 devices split between three machines. In our current setup, the Kinects are static and are oriented around a scene involving both static and dynamic objects.

In the future, we plan to extend the framework to support sensor motion, i.e., the Kinects will be moving amongst the other moving objects that they capture.

6. What are the practical applications of the work you’ve done so far? What is the future direction of your projects?

The framework we have built so far, which we call “reco”, allows us to easily calibrate and capture data from multiple Kinect V2 devices simultaneously, as well as fuse the captured data streams into 3D models on a per-frame basis using PCL. In the future, we plan to integrate frame-to-frame 3D model mapping, which will improve integrity of the resulting model, reduce occlusion, and eliminate collisions. We plan to advance the existing mapping methods as necessary to provide a better viewing experience.
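For readers curious what per-frame fusion of several calibrated sensors can look like, here is a minimal sketch. It is not the “reco” code and does not use PCL; it is plain NumPy, and the function names `make_extrinsic` and `fuse_frame`, as well as the sample poses, are hypothetical. It assumes calibration has already produced one 4x4 sensor-to-world transform per Kinect, and it simply moves each sensor's points into the shared world frame and stacks them.

```python
import numpy as np


def make_extrinsic(yaw_deg, translation):
    """Build a hypothetical 4x4 sensor-to-world transform from a yaw
    angle (rotation about Z, in degrees) and a translation vector."""
    a = np.deg2rad(yaw_deg)
    T = np.eye(4)
    T[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                 [np.sin(a),  np.cos(a), 0.0],
                 [0.0,        0.0,       1.0]]
    T[:3, 3] = translation
    return T


def fuse_frame(clouds, extrinsics):
    """Transform each sensor's (N_i, 3) point array into the world
    frame and concatenate the results into one (sum N_i, 3) array."""
    fused = []
    for points, T in zip(clouds, extrinsics):
        # p_world = R @ p_sensor + t, applied row-wise
        fused.append(points @ T[:3, :3].T + T[:3, 3])
    return np.vstack(fused)


if __name__ == "__main__":
    # Two made-up sensors that both see the same world point (1, 1, 0).
    clouds = [np.array([[1.0, 1.0, 0.0]]),   # sensor A, at the world origin
              np.array([[1.0, 0.0, 0.0]])]   # sensor B, rotated and shifted
    extrinsics = [make_extrinsic(0.0, [0.0, 0.0, 0.0]),
                  make_extrinsic(90.0, [1.0, 0.0, 0.0])]
    print(fuse_frame(clouds, extrinsics))
```

A real pipeline would also need depth-to-point back-projection, time synchronization between sensors, and some filtering or surface reconstruction of the overlapping regions; the sketch only shows the coordinate-frame step.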

Eventually, we will target 3D content generation for re-display in VR and AR devices. Therefore, the quality of the reconstructed world must be on a very high level. We also want to provide tools for editing of the captured data, in order to provide users as much artistic freedom as possible when generating their own content.

7. Do you have any advice for other Kinect developers out there?

Whether you work alone or in a team, make no mistake: there will be code, and lots of it. Try not to bind yourself to a particular hardware setup or software framework. Plan in advance and make room to be versatile. There is “cheap-and-dirty”, and then there is “elegant”: make the right choice for your project before you start. Be clear with yourself on whether you want to just throw something together over a single night, or whether you want to make a maintainable, powerful tool that others can use and improve later on.

Everyone has a lot going on, but if you have decided on a project, commit, and do not lose sight of it or get distracted. Always keep in mind: if you want to do a good job, then you need to use a good tool. Do not be too lazy to strategize and streamline your workflow: often, the thing that seems hard and long to set up now will save you many-fold the time and trouble later down the road. And, very importantly, never be afraid to learn new things along the way!

For those assembling/working with a team: look for energetic, driven people with flexible minds and useful experience. Beware of people with commitment issues. Inquire about their past and ask them to show you what they have actually done. Sometimes, the people who seem smartest may only turn out to be a hindrance when you really need them. Remember: talk is cheap, and even good ideas are worthless if their bearer is not ready to back them up with hard work and good code.

Once you have a team, respect and listen to them, but allocate a time for everything: there is time for discussions and planning, but most of the time is for concentrated work. Expect some level of autonomy, but do provide help to those who really need it, and, very importantly, do not be afraid to ask for help when you really need it!

Finally, the most important aspect of teamwork is honesty, which means not only being honest with other teammates, but also with yourself. Only if you are honest with yourself can you keep believing in yourself, your team, and what you are trying to give.

